Date: 01/01/1970

1969, April 7th: ARPANET became the technical core of what would become the Internet, and a primary tool in developing the technologies used. ARPANET development was centered around the Request for Comments (RFC) process, still used today for proposing and distributing Internet protocols and systems. RFC 1, entitled “Host Software”, was written by Steve Crocker from the University of California, Los Angeles, and published on April 7, 1969. These early years were documented in the 1972 film Computer Networks: The Heralds of Resource Sharing.

The Development of the Arpanet

In 1962, the report “On Distributed Communications” by Paul Baran was published by the Rand Corporation. Baran’s research, done under a grant from the U.S. Air Force, discusses how the U.S. military could protect its communications systems from serious attack. He outlines the principle of “redundancy of connectivity” and explores various models of forming communications systems and evaluating their vulnerability. The report proposes a communications system where there would be no obvious central command and control point, but all surviving points would be able to reestablish contact in the event of an attack on any one point. Thus damage to a part would not destroy the whole and its effect on the whole would be minimized. One of his recommendations is for a national public utility to transport computer data, much in the way the telephone system transports voice data. “Is it time now to start thinking about a new and possibly non-existent public utility,” Baran asks, “a common user digital data communication plant designed specifically for the transmission of digital data among a large set of subscribers?” He cautions against limiting the choice of technology for such a data network to that which is currently in use. He proposes that a packet-switching, store-and-forward technology be developed for a data network. However, because some of his research was then classified, it did not get very wide dissemination.

Other researchers were interested in computers and communications, particularly in the computer as a communication device. J.C.R. Licklider was one of the most influential. He was particularly interested in the man-computer communication relationship. Lick, as he asked people to call him, wondered how the computer could help humans to think and to solve problems. In an article called “Man-Computer Symbiosis”, he explores how the computer could help humans to do intellectual work.
Lick was also interested in the question of how the computer could help humans to communicate better. “In a few years men will be able to communicate more effectively through a machine than face to face,” Licklider and Robert Taylor wrote in an article they coauthored. “When minds interact,” they observe, “new ideas emerge.”

People like Paul Baran and J.C.R. Licklider were involved in proposing how to develop computer technology in ways that hadn’t been developed before.
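
Baran’s principle of “redundancy of connectivity” can be illustrated with a small sketch. The code below is not Baran’s own model; it assumes two hypothetical topologies (a star with a single central hub, and a 3×3 grid in which each node links to its neighbors) and checks, for every node, whether the survivors can still reach one another after that one node is destroyed. All function names here are illustrative.

```python
from collections import deque


def star(n):
    """Centralized network (assumed example): node 0 is the hub."""
    graph = {i: set() for i in range(n)}
    for i in range(1, n):
        graph[0].add(i)
        graph[i].add(0)
    return graph


def grid(rows, cols):
    """Distributed network (assumed example): each node links to its grid neighbors."""
    graph = {(r, c): set() for r in range(rows) for c in range(cols)}
    for (r, c) in list(graph):
        for dr, dc in ((0, 1), (1, 0)):
            neighbor = (r + dr, c + dc)
            if neighbor in graph:
                graph[(r, c)].add(neighbor)
                graph[neighbor].add((r, c))
    return graph


def survivors_connected(graph, destroyed):
    """Return True if all nodes other than `destroyed` can still reach each other."""
    alive = set(graph) - {destroyed}
    if not alive:
        return True
    start = next(iter(alive))
    seen = {start}
    queue = deque([start])
    while queue:  # breadth-first search over surviving nodes only
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor in alive and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen == alive


def fatal_nodes(graph):
    """Nodes whose loss alone would split the surviving network."""
    return [node for node in graph if not survivors_connected(graph, node)]


print(fatal_nodes(star(9)))     # the hub is a single point of failure
print(fatal_nodes(grid(3, 3)))  # no single loss disconnects the grid
```

In this toy comparison the star has exactly one node whose loss isolates everyone else, while the grid has none, which is the sense in which damage to a part need not destroy the whole.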

While Baran’s work had been classified, and thus was known only around military circles, Licklider, who had access to such military research and writing, was also involved in the computer research and education community. Larry Roberts, another of the pioneers involved in the early days of network research, explains how Lick’s vision of an Intergalactic Computer Network changed his life and career. Lick’s contribution, Roberts explains, represented the effort to “define the problems and benefits resulting from computer networking.”

After informal conversations with Lick, F. Corbato and A. Perlis, at the Second Congress on Information System Sciences in Hot Springs, Virginia, in November 1964, Larry Roberts “concluded that the most important problem in the computer field before us at the time was computer networking; the ability to access one computer from another easily and economically to permit resource sharing.” Roberts recalls, “That was a topic in which Licklider was very interested and his enthusiasm infected me.”

During the early 1960’s the U.S. military under its Advanced Research Projects Agency (ARPA) established two new funding offices, the Information Processing Techniques Office (IPTO) and another for behavioral science. From 1962-64, Licklider took a leave of absence from his position at a Massachusetts research firm, BBN, to give guidance to these two newly created offices. In reviewing this seminal period, Alan Perlis recalls how Lick’s philosophy guided ARPA’s funding of computer science research. Perlis explains, “I think that we all should be grateful to ARPA for not focusing on very specific projects such as workstations. There was no order issued that said, ‘We want a proposal on a workstation.’ Goodness knows, they would have gotten many of them. Instead, I think that ARPA, through Lick, realized that if you get ‘n’ good people together to do research on computing, you’re going to illuminate some reasonable fraction of the ways of proceeding because the computer is such a general instrument.” In retrospect Perlis explains, “We owe a great deal to ARPA for not circumscribing directions that people took in those days. I like to believe that the purpose of the military is to support ARPA, and the purpose of ARPA is to support research.”

Licklider confirms that he was guided in his philosophy by the rationale that a broad investigation of a problem was necessary in order to solve that problem. He explains, “There’s a lot of reason for adopting a broad delimitation rather than a narrow one because if you’re trying to find out where ideas come from, you don’t want to isolate yourself from the areas that they come from.”

Licklider attracted others involved in computer research to his vision that computer networking was the most important challenge.

In 1966-67 Lincoln Labs in Lexington, Massachusetts, and SDC in Santa Monica, California, got a grant from the DOD to begin research on linking computers across the continent. Larry Roberts, describing this work, explains, “Convinced that it was a worthwhile goal, we set up a test network to see where the problems would be. Since computer time sharing experiments at MIT (CTSS) and Dartmouth (DTSS) had demonstrated that it was possible to link different computer users to a single computer, the cross country experiment built on this advance.” (That is, once timesharing was possible, linking remote computers was also possible.)

Roberts reports that there was no trouble linking dissimilar computers. The problems, he claims, were with the telephone lines across the continent, i.e. that the throughput was inadequate to accomplish their goals. Thus their experiment set the basis for justifying research in setting up a nationwide store and forward packet switching data network.

During this period, ARPA was funding computer research at a number of U.S. universities and research labs. A decision was made to include research contractors in the experimental network – the Arpanet. A plan was created for a working network to link the 16 research groups together. A plan for the ARPANET was made available at the October 1967 ACM Symposium on Operating Systems Principles in Gatlinburg, Tennessee.

Shortly thereafter, Larry Roberts was recruited to head the IPTO office at ARPA to guide the research. The military set out specifications for the project and asked for bids. They wanted a proposal for a four computer network and a design for a network that would include 17 sites.

The award for the contract went to the Cambridge, Massachusetts firm Bolt Beranek and Newman Inc. (BBN).

The planned network would make use of minicomputers to serve as switching nodes for the host computers at sites that were to be connected to the network. Honeywell minicomputers (516’s) were chosen for the network of Interface Message Processors (IMP’s) that would be linked to each other. Each of the IMP’s would in turn be linked to a host computer. These IMP’s had only 12 kilobytes of memory, though they were the most powerful minicomputers available at the time.

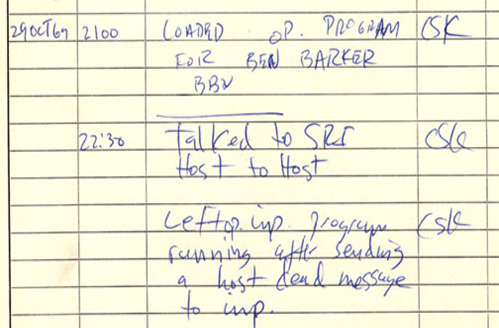

On Sept 1, 1969, the first IMP arrived at UCLA, which was to be the first site of the new network. It was connected to the Sigma 7 computer at UCLA. Shortly thereafter IMP’s were delivered to the other three sites in this initial testbed network. At SRI, the IMP was connected to an SDS-940 computer. At UCSB, the IMP was connected to an IBM 360/75. And at the University of Utah, the fourth site, the IMP was connected to a DEC PDP-10.

By the end of 1969, the first four IMP’s had been connected to the computers at their individual sites and the network connections between the IMP’s were operational. The researchers and scientists involved could begin to identify the problems they had to solve to develop a working network.

There were programming and technical problems to be solved so the different computers would be able to communicate with each other. Also, there was a need for an agreed-upon set of signals that would open up communication channels, allow data to pass through, and then close the channels. These agreed-upon standards were called protocols. The initial proposal for the research required those involved to work to establish protocols. In April 1969, the first meeting of the group to discuss establishing these protocols took place. They put together a set of documents that would be available to everyone involved for consideration and discussion. They called these Requests for Comment (RFC’s), and the first RFC was issued in April 1969.

As the problems of setting up the four computer network were identified and solved, the network was expanded to several more sites.

By April 1971, there were 15 nodes and 23 hosts in the network.

These earliest sites attached to the network were connected to Honeywell DDP-516 IMPs. These were:

1. UCLA
2. SRI
3. UCSB
4. U of UTAH
5. BBN
6. MIT
7. RAND Corp
8. SDC ? (Systems Development Corporation)
9. Harvard
10. Lincoln Lab
11. Stanford
12. U of Illinois (Urbana)
13. Case Western Reserve U.
14. CMU
15. NASA-AMES

Then smaller minicomputers, the Honeywell 316 (compatible with the 516 IMP but at half the cost), were connected. Some were configured as TIPs (i.e. Terminal IMPs) beginning with:

16. NASA-AMES TIP
17. MITRE TIP

(Listing of sites based on a post on Usenet, but the Completion Report also lists Burroughs as one of the first 15 sites.)

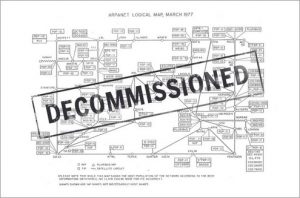

By January 1973, there were 35 nodes of which 15 were TIPs.

Early in 1973, a satellite link connected California with a TIP in Hawaii. With the rapid increase of network traffic, problems were discovered with the reliability of the subnet and corrections had to be worked on. In mid 1973, Norway and England in Europe were added to the net and the resulting problems had to be solved. By September 1973, there were 40 nodes and 45 hosts on the network. And the traffic had expanded from 1 million packets/day in 1972 to 2,900,000 packets/day by September, 1973.

By 1977, there were 111 host computers connected via the Arpanet. By 1983 there were 4000.

As the network was put into operation, the researchers learned which of their original assumptions and models were inaccurate. For example, BBN describes how they had initially failed to understand that the IMP’s would need to do error checking. They explain:

“The first four IMPs were developed and installed on schedule by the end of 1969. No sooner were these IMPs in the field than it became clear that some provision was needed to connect hosts relatively distant from an IMP (i.e., up to 2000 feet instead of the expected 50 feet). Thus in early 1970 a ‘distant’ IMP/host interface was developed. Augmented simply by heftier line drivers, these distant interfaces made clear for the first time the fallacy in the assumption that had been made that no error control was needed on the host/IMP interface because there would be no errors on such a local connection.”

The network was needed to uncover the actual bugs. In describing the importance of a test network, rather than trying to do the research in a laboratory, Alex McKenzie and David Walden, in their article “Arpanet, the Defense Data Network, and Internet” write:

“Errors in coding control were another problem. However carefully one designs, codes, and performs quality control, errors can still slip through. Fortunately, with a large number of IMPs in the network, most of these errors are found quickly because they occur so frequently. For instance, a bug in an IMP code that occurs once a day in one IMP, occurs every 15 minutes in a 100-IMP network. Unfortunately, some bugs still will remain. If a symptom of a bug is detected somewhere in a 100-IMP network once a week (often enough to be a problem), then it will happen only once every two years in a single IMP in a development lab for a programmer trying to find the source of the symptom. Thus, achieving a totally bug-free network is very difficult.”
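
The arithmetic behind McKenzie and Walden’s figures can be checked with a short sketch. It assumes, as a simplification not stated in the article, that every IMP encounters the bug independently and at the same average rate; the function names are illustrative only.

```python
MINUTES_PER_DAY = 24 * 60
DAYS_PER_YEAR = 365


def network_interval_minutes(per_imp_interval_days, imp_count):
    """Average minutes between occurrences anywhere in the network,
    given how often the bug shows up in a single IMP."""
    return per_imp_interval_days * MINUTES_PER_DAY / imp_count


def single_imp_interval_years(network_interval_days, imp_count):
    """Average years between occurrences in one isolated IMP,
    given how often the symptom is seen somewhere in the network."""
    return network_interval_days * imp_count / DAYS_PER_YEAR


# Once a day per IMP, 100 IMPs: roughly every 15 minutes network-wide.
print(network_interval_minutes(1, 100))              # 14.4

# Once a week network-wide, 100 IMPs: roughly two years in a lab IMP.
print(round(single_imp_interval_years(7, 100), 2))   # 1.92
```

Under these assumptions the quoted “every 15 minutes” and “once every two years” are rounded versions of about 14.4 minutes and about 1.9 years.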

In October 1972, the First International Conference on Computer Communications was held in Washington, D.C. A public demonstration of the ARPANET was given, setting up an actual node with 40 machines. Representatives from projects around the world including Canada, France, Japan, Norway, Sweden, Great Britain and the U.S. discussed the need to begin work on establishing agreed-upon protocols. The InterNetwork Working Group (INWG) was created to begin discussions for such a common protocol, and Vinton Cerf, who was involved with the Arpanet at UCLA, was chosen as the first Chairman. The vision proposed for the architectural principles for an international interconnection of networks was “a mess of independent, autonomous networks interconnected by gateways, just as independent circuits of ARPANET are interconnected by IMPs.”

The network continued to grow and expand.

In 1975 the ARPANET was transferred to the control of the DCA (Defense Communications Agency).

Evaluating the success of ARPANET research, Licklider recalled that he felt ARPA had been run by an enlightened set of military men while he was involved with it. “I don’t want to brag about ARPA,” he explains. “It is in my view, however, a very enlightened place. It was fun to work there. I think I never encountered brighter, more creative people, than the inhabitants of the third floor E-ring of the Pentagon. But that, I’ll say, was a long time ago, and I simply don’t know how bright and likeable they are now. But ARPA didn’t constrain me much.”

A post on Usenet by Eugene Miya, who was a student at one of the early Arpa sites, conveys the exciting environment of the early Arpanet. He writes:

“It was an effort to connect different kinds of computers back when a school or company had only one (that’s 1) computer. The first configuration of the ARPAnet had only 4 computers, I had luckily selected a school at one of those 4 sites: UCLA/Rand Corp, UCSB (us), SRI, and the U of Utah.

Who? The US DOD: Defense Department’s Advanced Research Projects Agency. ARPA was the sugar daddy of computer science. Some very bright people were given some money, freedom, and had a lot of vision. It not only started computer networks, but also computer graphics, computer flight simulation, head mounted displays, parallel processing, queuing models, VLSI, and a host of other ideas. Far from being evil warmongers, some neat work was done.

Why? Lots of reasons: intellectual curiosity, the need to have different machines communicate, study fault tolerance of communications systems in the event of nuclear war, share and connect expensive resources, very soft ideas to very hard ideas.

I first saw the term “internetwork” in a paper by folk from Xerox PARC (another ARPANET host). The issue was one of interconnecting Ethernets (which had the 256 [slightly less] host limitation). Schoch’s CACM worm program paper is a good one.

I learned much of this with the help of the NIC (Network Information Center). This does not mean the Internet is like this today. I think the early ARPAnet was kind of a wondrous neat place, sort of a golden era. You could get into other people’s machines with a minimum of hassle (someone else paid the bills). No more.”

He continues:

“Where did I fit in? I was a frosh nuclear engineering major, spending odd hours (2am-4am, sometimes on Fridays and weekends) doing hackerish things rather than doing student things: studying or dating, etc. I put together an interactive SPSS and learned a lot playing chess on an MIT[-MC] DEC-10 from an IBM-360. Think of the problems: 32-bit versus 36-bit, different character set [remember I started with EBCDIC], FTP then is largely FTP now, has changed very little. We didn’t have text editors available to students on the IBM (yes you could use the ARPAnet via punched card decks). Learned a lot. I wish I had hacked more.”

One of the surprising developments to the researchers of the ARPANET was the great popularity of electronic mail. Analyzing the reasons for this unanticipated benefit from their network development, Licklider and Vezza write, “By the fall of 1973, the great effectiveness and convenience of such fast, informed messages services … had been discovered by almost everyone who had worked on the development of the ARPANET – and especially by the then Director of ARPA, S.J. Lukasik, who soon had most of his office directors and program managers communicating with him and with their colleagues and their contractors via the network. Thereafter, both the number of (intercommunicating) electronic mail systems and the number of users of them on the ARPANET increased rapidly.”

“One of the advantages of the message system over letter mail,” they add, “was that, in an ARPANET message, one could write tersely and type imperfectly, even to an older person in a superior position and even to a person one did not know very well, and the recipient took no offense. The formality and perfection that most people expect in a typed letter did not become associated with network messages, probably because the network was so much faster, so much more like the telephone … Among the advantages of the network message services over the telephone were the fact that one could proceed immediately to the point without having to engage in small talk first, that the message services produced a preservable record, and that the sender and receiver did not have to be available at the same time.”

Describing email, the authors of the Completion Report write:

The largest single surprise of the ARPANET program has been the incredible popularity and success of network mail. There is little doubt that the techniques of network mail developed in connection with the ARPANET program are going to sweep the country and drastically change the techniques used for intercommunication in the public and private sectors.

Not only was the network used to see what the actual problems would be, the communication it made possible gave the researchers the ability to collaborate to deal with these problems.

Summarizing the important breakthrough represented by the Arpanet, they conclude:

“This ARPA program has created no less than a revolution in computer technology and has been one of the most successful projects ever undertaken by ARPA. The program has initiated extensive changes in the Defense Department’s use of computers as well as in the use of computers by the entire public and private sectors, both in the United States and around the world.

Just as the telephone, the telegraph, and the printing press had far-reaching effects on human intercommunication, the widespread utilization of computer networks which has been catalyzed by the ARPANET project represents a similarly far-reaching change in the use of computers by mankind.”