On October 27, 1986, NEC Corporation registered the nec.com domain name, making it the 30th .com domain ever to be registered.

NEC Corporation is a Japanese multinational provider of information technology (IT) services and products, headquartered in Minato, Tokyo, Japan. NEC provides IT and network solutions to business enterprises, communications services providers and to government agencies, and has also been the biggest PC vendor in Japan since the 1980s. The company was known as the Nippon Electric Company, Limited, before rebranding in 1983 as just NEC. Its NEC Semiconductors business unit was one of the worldwide top 20 semiconductor sales leaders before merging with Renesas Electronics. NEC is a member of the Sumitomo Group.

Company History

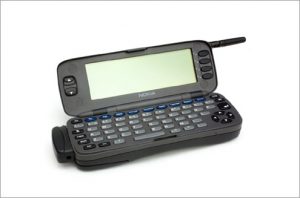

NEC Corporation is one of the world’s leading makers of computers, computer peripherals, and telecommunications equipment and owns majority control of NEC Electronics Corporation, one of the leading semiconductor makers in the world. NEC is considered one of Japan’s sogo denki, or general electric companies, a group that is typically said to also include Fujitsu Limited; Hitachi, Ltd.; Mitsubishi Electric Corporation; and Toshiba Corporation. Like the other members of the Japanese high-tech “Big Five,” NEC was hit hard at the turn of the millennium by a global downturn in demand in the corporate sector for electronics products. NEC has subsequently been undertaking an ongoing and massive restructuring, including an increasing emphasis on systems integration services, software, and Internet-related services. These operations are housed within the company’s IT Solutions business segment, which also includes mainframe computers, network servers, supercomputers, workstations, and computer peripherals. The Networking Solutions segment comprises optical, broadband, and wireless networking equipment and services as well as cellular phones and other communications devices. Computers, printers and other peripheral devices, Internet services, and network equipment aimed at the consumer market are handled through the Personal Solutions segment. About 17 percent of NEC’s net sales originate outside of Japan.

Early History Involving Western Electric Company

The Nippon Electric Company, Limited, as NEC Corporation was originally known, was first organized in 1899 as a limited partnership between Japanese investors and the Western Electric Company. Western Electric recognized that Japan, which was undergoing an ambitious industrialization, would soon be building a telephone network. With a solid monopoly in North America as the manufacturing arm of the Bell system, Western Electric sought to establish a strong market presence in Japan, as it had done in Europe. NEC went public the following year, with Western Electric a 54 percent owner. In need of a plant, NEC took over the Miyoshi Electrical Manufacturing Company in central Tokyo.

Under the management of Kunihiko Iwadare and with substantial direction from Western Electric, NEC was at first little more than a distributor of imported telephone equipment from Western Electric and General Electric. Iwadare, however, set NEC to producing magneto-type telephone sets and secured substantial orders from the Ministry of Communications for the government-sponsored telephone-network-expansion program. With steadily increasing, and guaranteed, business from the government, NEC was able to plan further expansion. In September 1900 NEC purchased from Mitsui a site at Mita Shikokumachi, where a second NEC factory was completed in December 1902.

In an attempt to heighten NEC’s competitiveness with rival Oki Shokai, Iwadare ordered his apprentices at Western Electric to study that company’s accounting and production-control systems. Takeshiro Maeda, a former Ministry of Communications official, recommended that NEC emphasize the consumer market, because he regarded the government sales as uncompetitive and limited. Still, government sales were the company’s major vehicle for growth, particularly with Japan’s expansion into Manchuria after the 1904-05 Russo-Japanese War.

Japan’s Ministry of Communications engineered an aggressive telecommunications program, linking the islands of Japan with commercial, military, and government offices in Korea and Manchuria. Like Bell in the United States, NEC was permitted a “natural,” though imperfect, monopoly over cable communications in Japan and its territories. NEC opened offices in Seoul in 1908 and Port Arthur (now Lüshun), China, in 1909.

A serious economic recession in Japan in 1913 forced the government to scale back sponsorship of its second telephone expansion program. Struggling to survive, NEC quickly turned back to importing, this time of household appliances such as the electric fan, a device never seen before in Japan. As quickly as it had fallen, the Japanese economy recovered in 1916, and the expansion program was placed back on schedule. Intelligent planning effectively insulated NEC from the effects of a second serious recession in 1922; NEC even continued to grow during that time. In the meantime, Western Electric’s stake in NEC was transferred in 1918 to the company’s international division, International Western Electric Company, Incorporated (IWE).

Relationship with Sumitomo Beginning in 1920s

In the early 1920s, IWE wanted to create a joint venture with NEC to produce electrical cables. NEC, however, lacked the industrial capacity to be an equal partner, and recommended the inclusion of a third party, Sumitomo Densen Seizosho, the cable-manufacturing division of the Sumitomo group. A three-way agreement was concluded, marking the beginning of an important role for Sumitomo in NEC’s operations.

On September 1, 1923, a violent earthquake severely damaged Tokyo and Yokohama, killing 140,000 people and leaving 3.4 million homeless. The Great Kanto Earthquake also destroyed four NEC factories and 80,000 telephone sets. Still, the government maintained its commitment to a modern telephone network and supported NEC’s development of automatic switching devices.

NEC began to work on radios and transmitting devices in 1924. As with the telephone project, the Japanese government sponsored the establishment of a radio network, the Nippon Hoso Kyokai, which began operation with Western Electric equipment from NEC. By May 1930, however, NEC had built its own transmitter, a 500-watt station at Okayama.

In 1925 American Telephone & Telegraph sold International Western Electric to International Telephone & Telegraph, which renamed the division International Standard Electric Corporation (ISE). Partially as a result, Yasujiro Niwa, a director who had joined NEC in 1924, felt NEC should lessen its dependence on technologies developed by foreign affiliates. In order to strengthen NEC’s research and development, Niwa inaugurated a policy of recruiting the best graduates from top universities. By 1928 NEC engineers had completed their own wire photo device.

The Japanese economy, which had been in a slump since 1927, fell into crisis after the Wall Street crash of 1929. With a rapidly contracting economy, the government was forced year after year to scale back its telecommunications projects. While it restricted imports of electrical equipment, the government also encouraged greater competition in the domestic market. Decreased subsidization and a shrinking market share reversed many of NEC’s gains during the previous decade.

The deployment of Japanese troops in Manchuria in 1931 created a strong wave of nationalism in Japan. Legislation was passed that forced ISE to transfer about 15 percent of its ownership in NEC to Sumitomo Densen. Under the directorship of Sumitomo’s Takesaburo Akiyama (Iwadare had retired in 1929), NEC began to work more closely with the Japanese military. A right-wing officer corps was at the time successfully engineering a rise to power and diverting money to military and industrial projects, particularly after Japan’s declaration of war against China in 1937. NEC’s sales grew sevenfold between 1931 and 1937, and by 1938 the company’s Mita and Tamagawa plants had been placed under military control.

Under pressure from the militarists, ISE was obliged to transfer a second block of NEC shares to Sumitomo; by 1941, ISE’s stake had fallen to 19.7 percent. Later that year, however, when Japan went to war against the Allied powers, ISE’s remaining share of NEC was confiscated as enemy property.

During the war, NEC worked on microwave communications and radar and sonar systems for the army and navy. The company took control of its prewar Chinese affiliate, China Electric, as well as a Javanese radio-research facility belonging to the Dutch East Indies Post, Telegraph and Telephone Service. In February 1943, Sumitomo took full control of NEC and renamed it Sumitomo Communication Industries. The newly named company’s production centers were removed to 15 different locations to minimize damage from American bombings. Despite this, Sumitomo Communication’s major plants at Ueno, Okayama, and Tamagawa were destroyed during the spring of 1945; by the end of the war in August, the company had ceased production altogether.

Struggling to Recover Following World War II

The Allied occupation authority ordered the dissolution of Japan’s giant zaibatsu (conglomerate) enterprises such as Sumitomo in November 1945. Sumitomo Communication elected to readopt the name Nippon Electric, and ownership of the company reverted to a government liquidation corporation. At the same time, the authority ordered a purge of industrialists who had cooperated with the military during the war, and Takeshi Kajii, wartime president of NEC, was removed from the company.

NEC’s new president, Toshihide Watanabe, faced the nearly impossible task of rehabilitating a company paralyzed by war damage, with 27,000 employees and no demand for its products. Although it was helped by the mass resignation of 12,000 workers, NEC was soon constrained by new labor legislation sponsored by the occupation authority. This legislation resulted in the formation of a powerful labor union that frequently came into conflict with NEC management. Although NEC was able to open its major factories by January 1946, workers demanding higher wages went on strike for 45 days only 18 months later.

The Japanese government helped NEC and other companies to remain viable through the award of public works projects. Uneasy about becoming dependent on these programs, however, Watanabe ordered the reapplication of NEC’s military technologies for commercial use. Submarine sonar equipment was thus converted into fish detectors, and military two-way radios were redesigned into all-band commercial radio receivers.

Still, NEC fell drastically short of its postwar recovery goals. In April 1949 the company closed its Ogaki, Seto, and Takasaki plants and its laboratory at Ikuta, and laid off 2,700 employees. The union responded by striking, yielding only after 106 days.

Next on Watanabe’s agenda was the establishment of patent protection for NEC’s technologies. During the war, all patented designs had become a “common national asset,” that is, part of the public domain. Eager to reestablish its link with ISE, NEC needed first to ensure that both companies’ technologies would be legally protected. This accomplished, NEC and ISE signed new cooperative agreements in July 1950.

Diversifying and Expanding Internationally in the 1950s and 1960s

With Japan’s new strategic importance in light of the Korean War, and with the advent of commercial radio broadcasting and subsequent telephone expansions, NEC had several new opportunities for growth. The company made great progress in television and microwave communication technologies and in 1953 created a separate consumer-appliance subsidiary called the New Nippon Electric Company. The company had begun research and development on transistors in 1950; it entered the computer field four years later, and in 1960 began developing integrated circuits. By 1956 NEC had diversified so successfully that a major reorganization became necessary and additional plant space in Sagamihara and Fuchu was put on line. NEC also established foreign offices in Taiwan, India, and Thailand in 1961. Watanabe, believing that NEC should more aggressively establish an international reputation, created a marketing subsidiary in the United States in 1963 called Nippon Electric New York, Inc. (which later became NEC America, Inc.). In addition, the company changed its logo, dropping the simple igeta diamond and “NEC” for a more distinctive script. In November of the following year, Watanabe resigned as president and became chairman of the board.

The company’s new president, Koji Kobayashi, took office with the realization that because the Japanese telephone market would soon become saturated, NEC would have to diversify more aggressively into new and peripheral electronics product lines to maintain its high growth rate. In preparation for this, he introduced modern management methods, including a zero-defects quality-control policy, a concept borrowed from the Martin Aircraft Company. Over the next two years, Kobayashi split NEC’s five divisions into 14, paving the way for a more decentralized management system that gave individual division heads greater autonomy and responsibility. With the continued introduction of more advanced television-broadcasting equipment and telephone switching devices, and taking advantage of the stronger position Watanabe and Kobayashi had created, NEC opened factories in Mexico and Brazil in 1968, Australia in 1969, and Korea in 1970. Affiliates were opened in Iran in 1971 and Malaysia in 1973. With a diminishing need for technical-assistance programs, NEC moved toward greater independence from ITT. That company’s interest in NEC (held through ISE) was reduced to 9.3 percent by 1970, and eliminated completely by 1978. Similarly, NEC shares retained after the war by Sumitomo-affiliated companies were gradually sold off, an action that reduced the Sumitomo group’s interest in NEC from 38 percent in 1961 to 28 percent in 1982.

NEC’s competitive advantage in labor costs eroded continually from the mid-1960s, when worker scarcity became apparent, until the early 1980s. This, together with President Richard Nixon’s decision to remove the U.S. dollar from the gold standard in 1971 and the effects of the Arab oil embargo of 1973, profoundly compromised NEC’s competitive standing. The company was forced into a seven-month retrenchment program in 1974, losing precious momentum in its competition with European and American firms.

Pursuit of C&C Vision Beginning in Late 1970s

In an effort to promote Japanese electronics companies, the Japanese government pushed through a series of partnership agreements among the Big Six computer makers: NEC, Fujitsu, Hitachi, Mitsubishi, Oki, and Toshiba. NEC and Toshiba formed a joint venture, which gave both companies an opportunity to pool their resources and eliminate redundant research. However, a subsequent attempt by NEC to enter the personal computer market failed miserably. Still, NEC, choosing to work with Honeywell instead of building IBM compatibles, invested heavily in its computer operations.

Later in the 1970s, NEC’s computer activities suffered from the fall of Honeywell’s computer fortunes. NEC recovered by relying more on its ability to develop systems in-house. The company was further spurred on by the visionary Kobayashi’s concept of “C&C,” his prediction of the future integration of computers and communications. This prescient vision, which was initially scoffed at, was first announced by NEC at INTELCOM 77. By 1984 NEC had sold more than one million personal computers in Japan. By 1990 the company, whose Japanese personal computers used a proprietary NEC operating system, held a commanding 56 percent share of the Japanese market, as well as a top five position in the United States, where it sold PC clones.

Kobayashi, in the meantime, was promoted to chairman and CEO, and was succeeded as president first by Tadao Tanaka in 1976, and then by Tadahiro Sekimoto in 1980. Under Kobayashi and Tanaka, NEC tripled its sales volume in the ten years to 1980. A greater proportion of those sales than ever before was derived from foreign markets, and between 1981 and 1983 NEC’s stock was listed on several European stock exchanges. In 1982 an NEC plant in Scotland began to manufacture memory devices; in 1987 NEC Technologies (UK) Ltd. was established in the United Kingdom to manufacture printers and other products for the European market. In 1984 NEC, Honeywell, and France’s Groupe Bull entered into an agreement involving the manufacture and distribution of NEC mainframe computers; the deal also provided for cross-licensing of patents and copyrights among the three companies. One year earlier, Nippon Electric had changed its English-language name to NEC Corporation.

Meanwhile, in the United States NEC formed NEC Electronics, Inc. in 1981 to be the company’s manufacturing and marketing arm for semiconductors in the United States. This subsidiary in 1984 opened a $100 million plant in Roseville, California, to manufacture electron devices. In 1989 another U.S. subsidiary, NEC Technologies, Inc., was established to handle the company’s computer peripheral operations in the United States.

Increased International Profile in the Early to Mid-1990s

By 1989, NEC’s sales had reached ¥3.13 trillion ($21.3 billion). The company’s focus on C&C had led it to top five positions in computer chips, computers, and telecommunications equipment. Like IBM, NEC was also vertically integrated, which added to its strength. Although NEC, like other Japanese electronics companies, suffered from the Japanese recession and strong yen of the early 1990s and from increased competition in Japan from U.S. companies, its aggressive pursuit of overseas opportunities helped the company maintain its leading and varied positions.

In Europe, NEC began selling its IBM-compatible PowerMate line in 1991. In late 1993 the relationship between NEC and Groupe Bull was strengthened with an additional NEC investment of ¥7 billion ($64.5 million) in the troubled state-owned computer manufacturer. By 1996 NEC had a 17 percent stake in Groupe Bull. In 1995 NEC spent $170 million to gain a 19.9 percent stake in Packard Bell Electronics, Inc., the leading U.S. marketer of home computers. In February 1996, NEC, Groupe Bull, and Packard Bell entered into a complex three-way arrangement. NEC invested an additional $283 million in Packard Bell, while Packard Bell acquired the assets of Groupe Bull’s PC subsidiary, Zenith Data Systems. In June of that same year, NEC and Packard Bell merged their PC businesses outside of China and Japan into a new firm called Packard Bell NEC Inc., with NEC investing another $300 million for a larger stake in Packard Bell. Packard Bell NEC immediately became the world’s fourth largest PC maker, trailing only Compaq, IBM, and Apple.

As the 1990s progressed, NEC increasingly looked to parts of Asia outside Japan for manufacturing and sales opportunities, particularly in semiconductors, transmission systems, cellular phones, and PCs. During fiscal 1996, for example, NEC entered into several joint ventures in China for the production and marketing of PBXs, PCs, and digital microwave communications systems and in Indonesia for the manufacture of semiconductors. In May 1997 NEC took a 30 percent stake in a $1 billion joint venture to construct the largest semiconductor factory in China.

In 1994 NEC announced the development of the SX-4 series of supercomputers, touted as the world’s fastest. U.S.-based competitor Cray Research Inc. later filed a complaint with the U.S. Commerce Department accusing NEC of dumping the series in the U.S. market. The Commerce Department in March 1997 ruled in Cray Research’s favor and imposed a 454 percent tariff on NEC’s supercomputers. NEC’s subsequent appeals of this ruling failed.

By 1996, Sekimoto had become chairman of NEC and Hisashi Kaneko was serving as president (Kobayashi died in 1996, when he still held the post of honorary chairman). During the 1990s, these executives had led NEC to increase the share of its sales derived outside Japan from 20 percent in 1990 to 28 percent in 1996. Nonetheless, NEC also kept its sights on its home market; NEC’s share of the domestic PC market had fallen to about 50 percent by 1996, leading to a plan to sell IBM-compatible computers in Japan for the first time. In October 1997 NEC began selling PCs in Japan with Intel microprocessors and the Windows 95 operating system. The belated move to adopt what had become the international PC standard was made to support NEC’s drive to increase its share of the global PC market.

Struggling and Restructuring: Late 1990s and Early 2000s

Through additional investments of $285 million in 1997 and $225 million in 1998 NEC gained majority control of Packard Bell NEC, which became a subsidiary of the Japanese firm. NEC and Groupe Bull had now infused more than $2 billion into Packard Bell, but the U.S. firm continued to hemorrhage, posting losses of more than $1 billion in 1997 and 1998. The company and its U.S.-based manufacturing simply could not compete with lower-cost contract manufacturers based in Asia and elsewhere. Late in 1999, with Packard Bell NEC on its way to posting another loss, NEC pulled the plug. Packard Bell’s California plant was closed, and NEC decided to abandon the retail PC market in the United States. The Packard Bell brand disappeared from the U.S. scene. NEC began focusing its U.S. PC efforts on the corporate market, where it sold computers under the NEC brand.

Meanwhile, NEC was being buffeted by a host of additional problems. The prolonged economic downturn in Japan depressed demand for high-tech products, including personal computers and consumer electronics. At the same time, fierce international competition among both electronics and chip makers was cutting drastically into profit margins on consumer electronics and semiconductors. Compounding matters was the economic crisis that erupted in Asia in mid-1997. As a result, net income for fiscal 1998 plummeted 55 percent, and the following year NEC, further battered by a sharp increase in the value of the yen, fell into the red, posting a net loss of ¥157.9 billion ($1.34 billion), its largest loss to that time. In October 1998, midway through that loss-making year, Sekimoto resigned from the chairmanship following the revelation of NEC’s involvement in a defense procurement scandal. Executives of a partly owned NEC subsidiary were charged with overbilling Japan’s Defense Agency and with bribing officials at the agency to gain business. The executives were later convicted.

At the end of 1999, Hajime Sasaki took over as NEC chairman, and Koji Nishigaki replaced Kaneko as president. Reflecting the profound changes that were needed to turn the company’s fortunes around, Sasaki was the first chairman to have come from NEC’s semiconductor side, rather than the telecommunications operations, and Nishigaki was the first president to come from the computer-systems divisions as well as the first with a background in marketing rather than engineering. The new managers recognized that they would have to make fundamental changes to the way NEC operated.

To improve profitability, they almost immediately announced that the workforce would be reduced by 10 percent, or 15,000 positions, with 6,000 workers laid off overseas and 9,000 job cuts in Japan coming through attrition (layoffs still being anathema in that society). They began a debt-reduction program to improve NEC’s financial structure, which in early 1999 was weighed down by ¥2.38 trillion ($20.13 billion) in liabilities. NEC also shifted its focus from hardware to the Internet and Internet-related software and services, building on its ownership of Biglobe, one of the leading Internet service providers in Japan, boasting 2.7 million members in late 1999, three years after the service’s launch. To support this shift, NEC in April 2000 reorganized its operations into three autonomous in-house companies based on customers and markets served: NEC Solutions, providing Internet solutions for corporate customers and individuals; NEC Networks, focusing on Internet solutions for network service providers; and NEC Electron Devices, supplying device solutions for manufacturers of Internet-related hardware. Like the other sogo denki, NEC had traditionally been a go-it-alone company, but it now began aggressively pursuing joint ventures with its competitors to spread the costs and risks of developing new products. In one of the first such ventures, NEC joined with Hitachi to form Elpida Memory, Inc., to make the dynamic random-access memory chips (DRAMs) used in personal computers. Ventures were also formed with Mitsubishi Electric in the area of display monitors and with Toshiba in space systems. NEC’s deemphasis of manufacturing also led to the closure of a number of plants located outside of Japan.

Although these and other initiatives helped NEC return to profitability in fiscal 2000 and 2001, the global downturn in the information technology sector, coupled with heightened competition from China and other low-cost countries and the economic fallout from the terrorist attacks of 9/11, sent the company deep into the red again in fiscal 2002; a net loss of ¥312 billion ($2.35 billion) was reported, reflecting ¥370.47 billion ($2.79 billion) in restructuring and other charges. The semiconductor sector suffered the deepest falloff, with the prices of certain commodity chips plunging by nearly 90 percent. In response, NEC Electron Devices closed down a number of plants and eliminated 4,000 jobs, including 2,500 in Japan. An additional 14,000 job cuts were announced in early 2002, along with additional plant closings and the elimination of certain noncore product lines.

In a further cutback of company assets and in an attempt to raise cash for other initiatives, NEC began taking some of its subsidiaries public. Both NEC Soft, Ltd., a developer of software, and NEC Machinery Corporation, a producer of semiconductor manufacturing machinery and factory automation systems, were taken public in 2000. In February 2002 NEC sold about a one-third interest in NEC Mobiling, Ltd., a distributor of mobile phones and developer of software for mobile and wireless communications network systems, to the public. Later that year, a similar one-third interest was sold to the public in NEC Fielding, Ltd., a provider of maintenance services for computers and computer peripheral products. NEC’s most radical such maneuver involved its troubled semiconductor business. In November 2002 all of NEC’s semiconductor operations, except for the DRAM business now residing within Elpida Memory, were placed into a separately operating subsidiary called NEC Electronics Corporation. NEC then reduced its stake in the newly formed company to 70 percent through a July 2003 IPO that raised ¥155.4 billion ($1.31 billion).

Restructuring efforts continued in 2003 under the new leadership of Akinobu Kanasugi, who took over as president from Nishigaki, who was named vice-chairman. Kanasugi had previously been in charge of NEC Solutions. Concurrent with the appointment of the new president, NEC replaced its in-house company structure with a business line structure, with NEC Solutions evolving into an IT Solutions segment (comprising systems integration services, software, and Internet-related services as well as computers and peripherals) and NEC Networks becoming Network Solutions (comprising network integration services as well as telecommunications and broadband Internet equipment). The eventual goal was to merge the information technology and networking groups in order to offer fully integrated “total” IT/networking/telecommunications solutions encompassing software, operational and maintenance services, and equipment. NEC also established a Personal Solutions group to offer a full range of products and services, including Biglobe, to consumers. The transformation of NEC was far from complete, and its success uncertain (the firm posted its second straight net loss during fiscal 2003), but the company’s restructuring efforts were as aggressive as, if not more aggressive than, those of the other big Japanese electronics firms. NEC seemed determined to remain among the world’s high-tech leaders.