It is estimated that in 1993 the Internet carried only 1% of the information flowing through two-way telecommunication. By 2000 this figure had grown to 51%, and by 2007 more than 97% of all telecommunicated information was carried over the Internet.

WHAT IS THE INTERNET?

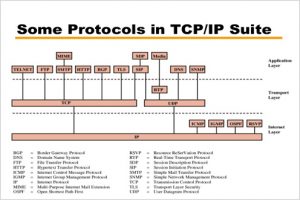

The Internet is a worldwide system of interconnected computer networks that use the TCP/IP set of network protocols to reach billions of users. The Internet began as a U.S. Department of Defense network to link scientists and university professors around the world. Today, the Internet is a network of networks: a global data communications system that links millions of private, public, academic and business networks via an international telecommunications backbone consisting of various electronic and optical networking technologies. The terms “Internet” and “World Wide Web” are often used interchangeably; however, they are not one and the same. The World Wide Web is one of the services that runs over the Internet.

THE INFLUENCE AND IMPACT OF THE INTERNET

The influence of the Internet on society is almost impossible to summarize properly because it is so all-encompassing. Though much of the world, unfortunately, still does not have Internet access, its influence on the lives of people in developed countries with readily available access is great and affects just about every aspect of life. In the most general terms, the Internet has made many aspects of modern life much more convenient: paying bills, buying clothes, researching and learning new things, keeping in contact with people and meeting new ones. Communication has also become easier, as the Internet offers new ways not only to keep in touch with the people you know, but also to meet new people and network. The Internet and programs like Skype have made the international phone industry almost obsolete by giving everyone with Internet access the ability to talk to people all around the world for free instead of paying for landline calls. Social networking sites such as Facebook, Twitter, YouTube and LinkedIn have also contributed to a social revolution that allows people to share their lives, everyday actions and thoughts with millions.

The Internet has also turned into big business and has created a completely new marketplace that did not exist before it. Many people today make a living off the Internet, and some of the biggest corporations in the world, like Google, Yahoo and eBay, have the Internet to thank for their success. Business practices have also changed drastically. Offshoring and outsourcing have become industry standards because the Internet allows people in different parts of the world to work together remotely, without having to be in the same office or even the same city to cooperate effectively. All this only scratches the surface of the Internet’s impact on the world today, and to say that it has greatly influenced changes in modern society would still be an understatement.

THE FUTURE: INTERNET2 AND NEXT GENERATION NETWORKS

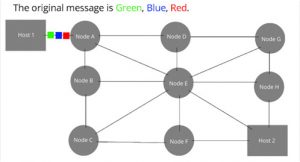

The public Internet was not initially designed to handle massive quantities of data flowing through millions of networks. In response to this problem, experimental national research networks (NRNs), such as Internet2 and NGI (Next Generation Internet), are developing high-speed, next-generation networks. In the United States, Internet2 is the foremost not-for-profit advanced networking consortium, led by over 200 universities in cooperation with 70 leading corporations, 50 international partners and 45 nonprofit and government agencies. The Internet2 community is actively engaged in developing and testing new network technologies that are critical to the future progress of the Internet. Internet2 operates the Internet2 Network, a next-generation hybrid optical and packet network that furnishes a 100 Gbps network backbone, providing the U.S. research and education community with a nationwide, dynamic, robust and cost-effective network that satisfies their bandwidth-intensive requirements. Although this private network does not replace the Internet, it does provide an environment in which cutting-edge technologies can be developed that may eventually migrate to the public Internet. Internet2 research groups are developing and implementing new technologies such as IPv6, multicasting and quality of service (QoS) that will enable revolutionary Internet applications.

New quality of service (QoS) technologies, for instance, would allow the Internet to provide different levels of service, depending on the type of data being transmitted. Different types of data packets could receive different levels of priority as they travel over a network. For example, packets for an application such as videoconferencing, which requires real-time delivery, would be assigned higher priority than e-mail messages. However, advocates of net neutrality argue that data discrimination could lead to a tiered service model being imposed on the Internet by telecom companies that would undermine Internet freedoms. More than just a faster web, these new technologies will enable completely new advanced applications for distributed computation, digital libraries, virtual laboratories, distance learning and tele-immersion. As next-generation Internet development continues to push the boundaries of what’s possible, the existing Internet is also being enhanced to provide higher transmission speeds, increased security and different levels of service.
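The prioritization idea described above can be illustrated with a small sketch. This is not how Internet2 or any particular router implements QoS; it is a minimal strict-priority queue, assuming hypothetical traffic classes (`video`, `web`, `email`) and priority numbers chosen only for illustration. Real equipment typically uses more elaborate scheduling so that low-priority traffic is not starved.

```python
import heapq
from itertools import count

# Hypothetical traffic classes; a lower number means "send first".
PRIORITY = {"video": 0, "web": 1, "email": 2}


class PriorityScheduler:
    """Strict-priority packet queue: always sends the most urgent packet next."""

    def __init__(self):
        self._heap = []
        self._arrival = count()  # tie-breaker keeps arrival order within a class

    def enqueue(self, kind, payload):
        # Tuples compare element by element: priority first, then arrival order.
        heapq.heappush(self._heap, (PRIORITY[kind], next(self._arrival), kind, payload))

    def dequeue(self):
        _, _, kind, payload = heapq.heappop(self._heap)
        return kind, payload

    def __len__(self):
        return len(self._heap)


def drain(scheduler):
    """Send every queued packet and return them in transmission order."""
    sent = []
    while len(scheduler):
        sent.append(scheduler.dequeue())
    return sent


sched = PriorityScheduler()
sched.enqueue("email", "msg-1")
sched.enqueue("video", "frame-1")
sched.enqueue("email", "msg-2")
sched.enqueue("video", "frame-2")

order = drain(sched)
print(order)
```

Even though the e-mail packets arrived first, both video frames are sent ahead of them. That is the behavior videoconferencing benefits from, and also the kind of differential treatment that net neutrality advocates are concerned about.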

The incredible growth of the Internet since 2000

Worldwide Internet users, 2000 and 2010

First off, the one thing you probably wanted to know right away. Here is how much the Internet has grown since the year 2000.

There were only 361 million Internet users in 2000, in the entire world. For perspective, that’s barely two-thirds of the size of Facebook today.

The chart really says it all. There are more than five times as many Internet users now as there were in 2000. And as has been noted elsewhere, the number of Internet users in the world is now close to passing two billion and may do so before the end of this year.

The Internet hasn’t just become larger; it has also become more spread out, more global.

In 2000, the top 10 countries accounted for 73% of all Internet users.

In 2010, that number has decreased to 60%.

This becomes evident when viewing the distribution of Internet users for the top 50 countries in 2000 and in 2010. Note how much “thicker” the tail of the 2010 graph is.

Thanks to this growth, there are now many more countries with a significant presence on the Internet. Here’s another way to see how much things have changed:

Internet users by world region, 2000 and 2010

Now that we’ve established that the number of Internet users is more than five times as large as it was in 2000, how has that growth been distributed through the different regions of the world?

Back in 2000, Asia, North America and Europe were almost on an even footing in terms of Internet users. Now in 2010, the picture is a very different one. Asia has pulled away as the single largest region, followed by Europe, then by North America, and a significant distance exists between the three.

It’s also highly notable how the number of Internet users in Africa has increased. In 2000, the entire continent of Africa had just 4.5 million Internet users. In 2010 that has grown to more than 100 million.
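The growth figures quoted in this section can be checked with a few lines of arithmetic. The sketch below uses only numbers stated in the text (361 million users worldwide in 2000, "more than five times" as many in 2010, and Africa growing from 4.5 million to more than 100 million). Because the 2010 values are given as "more than" figures, the results are lower bounds rather than exact counts, and the variable names are illustrative.

```python
# Figures quoted in the text, in millions of Internet users.
WORLD_2000 = 361
AFRICA_2000 = 4.5
AFRICA_2010_MIN = 100  # "more than 100 million", so this is a lower bound


def growth_factor(before, after):
    """Return how many times larger `after` is than `before`."""
    return after / before


# "More than five times as many" users implies at least this many in 2010.
world_2010_min = 5 * WORLD_2000

# Africa's growth over the same decade, again as a lower bound.
africa_factor_min = growth_factor(AFRICA_2000, AFRICA_2010_MIN)

print(f"World 2010 lower bound: {world_2010_min} million")
print(f"Africa growth: at least {africa_factor_min:.1f}x")
```

The worldwide lower bound of 1,805 million is consistent with the statement that the total is close to passing two billion, and Africa's growth of at least roughly 22 times far outpaces the worldwide factor of about five.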

History of the Internet – 2000 and Beyond

2000: After a bitter antitrust lawsuit, Microsoft is ordered to split into two separate businesses, one focused on its highly successful Windows operating system and the other on its numerous software applications. The year goes down in Internet history for the rise and burst of the Internet bubble. Internet consumer companies are widespread and visible in everyday life, with dot-com companies paying millions of dollars for half-minute advertisements during the Super Bowl. When investors hear reports that Microsoft will be unable to settle its antitrust lawsuit with the government, the Dow Jones Industrial Average suffers the biggest one-day drop in its history up to that point. Microsoft settles the lawsuit in 2001, allowing it to remain a single company. Meanwhile, in 2000 the Internet revolution marches on, with Google’s rise to become the world’s largest search engine and the first official instance of Internet voting in the United States, held during a Democratic Party primary in Arizona.

2002: Napster is shut down after it fails to win a lawsuit brought by the Recording Industry Association of America.

2003: The rise of spam; unsolicited junk mail messages begin to account for over half of all e-mail messages sent and received. Though the US Congress passes anti-spam legislation, the scourge of unsolicited email remains.

What was it like to be on the Internet in the 2000s?

Since we are still in the 2000s, and the first decade of them ended only 5 years ago, I will assume you mean the earlier part of the decade, from 2000 to 2005.

The first bubble had already popped, so the first round of b/s start-ups just ripping each other off had been cleansed. Lots of services were just suddenly gone. This informs my thinking now: I know for a fact our current start-up environment is unstable, and I don’t get too invested in most new services.

Firefox was your web browser unless you were an idiot or were told by an idiot not to use it….or you were old. Prior to this you were likely using Netscape or Opera though the version of IE that came with Win2000 was ok too.

Though early broadband was pretty widespread, dial-up was more common, just due to cost. AOL was still the biggest ISP but was on a small downward trajectory. However, this was mostly due to customer poaching by low-cost providers like NetZero.

Windows XP was king, but after dropping OS 9 in 1999, Mac was beginning to show signs of life with products like OS X and the iBook. As such, Wi-Fi was becoming far more common, and seeing people out and about on their laptops was no longer limited to the business district.

Myspace launched in 2003, and as time went on it poached people from earlier services like LiveJournal and Xanga, though at least to begin with, services like Tribe.net made it hard for Myspace to become the undisputed king of social.

Google was king, but just barely, with about 54% market share (today it’s around 70%). There were still several alternatives in Yahoo, MSN (separate at the time), AOL, Terra/Lycos, AltaVista, and Ask Jeeves. I don’t think I started using Google heavily until after 2005.

AIM, everyone used AIM. Most people had an AOL account solely to use AIM. That is, unless you were one of the one or two people everyone knew who preferred Yahoo or MSN chat…and everyone hated you for it. Also, on a chat-related note, EVERYONE wanted a Sidekick; it was the lone reason T-Mobile made it out of the first decade of the 2000s.

There was no YouTube, and videos were pretty uncommon. The web was still text heavy. This is why it was mind-blowing when Myspace allowed users to post music to their personal pages.

GeoCities and similar services such as Angelfire were very popular but not as universal as social networking sites would become. Most people knew at least a couple of people with pages on networks like these, and were constantly being pestered via email to visit them.

File sharing on the likes of Napster and Gnutella was a big freaking deal. These were the early days too when the files you got were of great quality and not filled with internet herpes.

With file sharing, music was plentiful and theoretically portable. But the idea that everyone had an iPod is revisionist history. The iPod was initially Mac only, and almost no one had a Mac. Once it was released for PC, it took a while for iPods to be in most people’s hands. Folks spent a lot of time downloading music and then burning it to CDs. In my college classes there were a couple of Creative Nomad players, and I had an Archos Jukebox, but we were the outliers. I got my first iPod in 2005; it was a Gen 3…the first one available for PC. I got it on sale when the Gen 4 was announced in 2004.

You were tethered, not to your phone, which you likely didn’t have…but to your desk. You would come home from school and race to the computer to catch up on stuff; instant, all-the-time access was still a few years off for most people.

Online games started becoming popular. Games Domain became Yahoo! Games, and companies like Blizzard, which had been around for a while, became far better known during this time with online offerings such as Battle.net.

Wikipedia launched in 2001, forever changing how people came across many kinds of source material. I immediately forgot all about Encarta.